Surevine work almost entirely in Amazon’s “Elastic Compute Cloud” (EC2). This means we don’t need our own building somewhere with a pile of servers and networking. It doesn’t mean we can ignore how we use that compute resource, though. Like everything else, it is subject to supply and demand: even something as big as Amazon’s cloud has peaks and troughs.

(According to Gartner, Amazon have five times the capacity of their 14 rivals combined.)

But how can this affect us as “users”?

Well, recently, one of my colleagues posted on our corporate chat room that he couldn’t start up a c1.xlarge instance.

To explain that a bit, here is some background. If you already use Amazon and understand their pricing and sizing, skip down a few lines!

Amazon use various prefixes and words to describe different sorts of virtual machine (“instance” being Amazon’s term for what VMware call a “guest”).

In this case, c1 means compute optimised and xlarge (“extra large”) is the size. You can also have m1 machines, which are general purpose, m2 machines, which are memory optimised, and so on.

Besides large, the sizes include small, medium and xlarge, and some families go on to 2xlarge, 4xlarge and 8xlarge multiples.

They don’t offer all combinations. See what they do offer yourself here.

Amazon have done some clever calculations and decided how much each of these instances should cost you per hour. That pricing can be seen here.

The “normal” way to pay is simple: you pay that rate for every hour your machine is running. Stop the machine and you stop paying; start it up and it costs money again.

If you were skipping down, you might want to stop now, unless you don’t know what “Spot pricing” is.

“Spot pricing” is like eBay for EC2. You make a bid of how much you are prepared to pay for your compute time, then if the price is lower than that, you get it. If it goes above your bid, your machine shuts down.
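The bidding rule above can be sketched as a small simulation. This is a simplified model under stated assumptions: the price changes once an hour, you pay the spot price (not your bid) while running, and the request is one-off, so an interrupted instance does not come back. `simulate_spot` is a hypothetical helper and the prices are made-up illustrative data, not anything from Amazon’s API.

```python
def simulate_spot(hourly_prices, bid):
    """Simplified spot model: run while price <= bid, stop when it exceeds it.

    hourly_prices: hypothetical spot prices, one per hour.
    bid: the most you are prepared to pay per hour.
    Returns (hours_run, total_cost, interrupted_at), where interrupted_at is
    the index of the hour the machine was shut down, or None if it never was.
    """
    hours_run = 0
    total_cost = 0.0
    for hour, price in enumerate(hourly_prices):
        if price > bid:
            # The spot price went above the bid, so the machine shuts down.
            return hours_run, total_cost, hour
        hours_run += 1
        total_cost += price  # assumption: you pay the spot price, not your bid
    return hours_run, total_cost, None


if __name__ == "__main__":
    prices = [0.10, 0.12, 0.11, 0.95, 0.10]  # made-up hourly spot prices
    print(simulate_spot(prices, bid=0.50))
```

With a $0.50 bid, the machine runs for three hours and is shut down when the price jumps to $0.95, even though the price falls again afterwards.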

This can cut your costs significantly if your workload can tolerate that sort of handling. We don’t use it ourselves because developers get annoyed when the machine they’re writing code on shuts down without warning (you don’t have to be a developer to be annoyed by that!), so we use the normal “on-demand” pricing model.

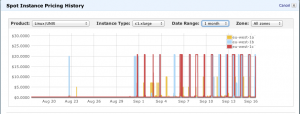

However, the “Spot Pricing” area of Amazon does show you a graph of the price history for your chosen type of machine, so you can decide where to put your bid. Whenever I’ve checked the EU data centre, it has looked pretty stable, with very few peaks, so spot pricing does seem to be a good deal.
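One simple way to turn that price-history graph into a bid is to pick a price that would have covered most past hours. The sketch below uses a nearest-rank percentile computed in integer arithmetic; `bid_from_history` and the sample history are hypothetical, and this is just one heuristic, not Amazon’s guidance.

```python
def bid_from_history(price_history, percent=90):
    """Return the lowest observed price covering `percent`% of the history.

    Hypothetical heuristic: nearest-rank percentile, where the rank is
    ceil(percent * n / 100), computed with integers to avoid float rounding.
    """
    if not price_history:
        raise ValueError("need at least one observed price")
    if not 1 <= percent <= 100:
        raise ValueError("percent must be between 1 and 100")
    ordered = sorted(price_history)
    rank = (percent * len(ordered) + 99) // 100  # 1-based nearest rank
    return ordered[rank - 1]


if __name__ == "__main__":
    # Made-up hourly spot prices with one short spike at the end.
    history = [0.10, 0.11, 0.12, 0.10, 0.13, 0.10, 0.11, 0.12, 0.10, 2.00]
    print(bid_from_history(history, percent=90))  # prints 0.13
```

At 90% the one-off $2.00 spike is ignored, so a repeat of that spike would still shut the machine down; choosing the percentile is choosing how much interruption you will put up with.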

Now is the time to stop skipping; I hope this is the entertaining bit!

Checking the price of a c1.xlarge shows that it has been having some serious peaks lately, with the price running at about $20 per hour most of the time. That suggests a serious shortage of resource and someone making heavy use of it.

For about the last 15 days someone has been using a lot of c1.xlarge instances, so the spot price has gone from below the normal on-demand price of $0.66/hour to $20.00/hour. Some of the peaks don’t go all the way to the top, which suggests that someone is paying that price, then more people start instances and the price climbs even further.

Now, if this were simply a value based on overall available resources, you might expect CPU time or memory in general to get more expensive. But no: if you check an m2.4xlarge, the price has remained pretty flat.

In fact, you can run an m2.4xlarge for $0.38/hour on spot pricing, which is about 42% cheaper than a c1.xlarge at the normal on-demand price.
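The exact saving is quick to check from the two prices quoted above (both are snapshots from the time of writing):

```python
# Prices quoted in this post, in dollars per hour.
C1_XLARGE_ON_DEMAND = 0.66  # c1.xlarge, normal on-demand price
M2_4XLARGE_SPOT = 0.38      # m2.4xlarge, spot price at the time

# Fractional saving of the m2.4xlarge spot price relative to c1.xlarge on-demand.
saving = 1 - M2_4XLARGE_SPOT / C1_XLARGE_ON_DEMAND
print(f"m2.4xlarge spot is {saving:.0%} cheaper than c1.xlarge on-demand")
# prints: m2.4xlarge spot is 42% cheaper than c1.xlarge on-demand
```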

Why do I mention an m2.4xlarge? Because it offers more compute (26 ECUs against 20, with the same 8 vCPUs) and nearly ten times the RAM of a c1.xlarge:

| Instance Family | Instance Type | Processor Arch | vCPU | ECU | Memory (GiB) | On-demand Price (per hour) |
|---|---|---|---|---|---|---|
| Compute optimized | c1.xlarge | 64-bit | 8 | 20 | 7 | $0.66 |
| Memory optimized | m2.4xlarge | 64-bit | 8 | 26 | 68.4 | $1.84 |
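Normalising the hourly price by capacity makes the comparison clearer. The sketch below divides each price by the ECU and memory figures from the table; the two spot prices ($20.00 for c1.xlarge, $0.38 for m2.4xlarge) are the snapshots quoted in this post, and the `Offer` type is just an illustrative structure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Offer:
    name: str
    ecu: float             # EC2 Compute Units, from the table
    memory_gib: float      # memory in GiB, from the table
    price_per_hour: float  # dollars per hour


OFFERS = [
    Offer("c1.xlarge on-demand", 20, 7, 0.66),
    Offer("c1.xlarge spot (~$20)", 20, 7, 20.00),
    Offer("m2.4xlarge on-demand", 26, 68.4, 1.84),
    Offer("m2.4xlarge spot", 26, 68.4, 0.38),
]


def cost_per_ecu(offer):
    """Dollars per ECU-hour."""
    return offer.price_per_hour / offer.ecu


def cost_per_gib(offer):
    """Dollars per GiB-hour of memory."""
    return offer.price_per_hour / offer.memory_gib


if __name__ == "__main__":
    # Cheapest compute first.
    for offer in sorted(OFFERS, key=cost_per_ecu):
        print(f"{offer.name:24} ${cost_per_ecu(offer):.4f}/ECU-hour  "
              f"${cost_per_gib(offer):.4f}/GiB-hour")
```

On these numbers the m2.4xlarge spot price is the cheapest per ECU and the c1.xlarge at around $20 is by far the most expensive. On-demand c1.xlarge is still cheaper per ECU than on-demand m2.4xlarge, but the on-demand m2.4xlarge costs only about 7% of the $20 spot c1.xlarge per ECU.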

So, if you didn’t use spot pricing for this workload and instead ran the bigger m2.4xlarge at its on-demand price of $1.84 per hour, you’d get more capacity for less than a tenth of the $20 spot price! Looking at the graph, the workload seems fairly predictable, with peaks from about 14:00 to 21:00.

If your workload is already designed to cope with being switched off and on, you can simply fire up some instances of the other type and run it there.

Presumably, someone somewhere is starting these c1.xlarge instances with a script. What they need to do is rewrite it to check the price of the different instance types and take full advantage of EC2’s flexibility.
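A minimal sketch of that check, assuming the script already knows each instance type’s capacity and on-demand price and has a current spot price per type. In a real script the spot prices could come from the EC2 API (for example boto3’s `describe_spot_price_history`); here they are passed in as a plain dictionary so the sketch runs offline. `InstanceSpec`, `SPECS` and `cheapest_option` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class InstanceSpec:
    ecu: float
    memory_gib: float
    on_demand_price: float  # dollars per hour


# Figures from the table in this post; other types would be added the same way.
SPECS: Dict[str, InstanceSpec] = {
    "c1.xlarge": InstanceSpec(ecu=20, memory_gib=7, on_demand_price=0.66),
    "m2.4xlarge": InstanceSpec(ecu=26, memory_gib=68.4, on_demand_price=1.84),
}


def cheapest_option(
    spot_prices: Dict[str, float],
    min_ecu: float,
    min_memory_gib: float,
    allow_spot: bool = True,
) -> Optional[Tuple[str, str, float]]:
    """Return (instance_type, pricing_model, price_per_hour) for the cheapest
    way to get at least the requested capacity, or None if nothing fits.

    spot_prices: current spot price per instance type (hypothetical input).
    allow_spot: set False for workloads that cannot survive being shut down.
    """
    best = None
    for name, spec in SPECS.items():
        if spec.ecu < min_ecu or spec.memory_gib < min_memory_gib:
            continue  # too small for the workload
        candidates = [("on-demand", spec.on_demand_price)]
        if allow_spot and name in spot_prices:
            candidates.append(("spot", spot_prices[name]))
        for model, price in candidates:
            if best is None or price < best[2]:
                best = (name, model, price)
    return best


if __name__ == "__main__":
    current_spot = {"c1.xlarge": 20.00, "m2.4xlarge": 0.38}
    print(cheapest_option(current_spot, min_ecu=20, min_memory_gib=7))
```

With the prices in this post, a workload needing c1.xlarge-sized capacity would be sent to an m2.4xlarge on spot at $0.38 per hour; with `allow_spot=False` it would fall back to an on-demand c1.xlarge at $0.66.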